Graph and Tensor Complexity

I have introduced native measures of universal complexity i.e. independent of description language and selected feature(s). This was by way of algorithmic probability based on

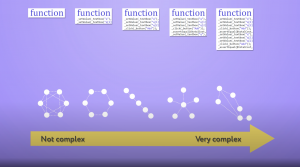

Designing the tools to navigate software space

(1) The introduction and calculation of a resource-bounded version of a measure inspired by algorithmic probability that has enabled:

(a) a first estimation of an empirical distribution of the so-called Universal Distribution or Levin’s semi-measure. The uncertainty of the estimation arises from its uncomputability, the lack of knowledge of the convergence rate and the optimality of the model used. However, among our contributions is the construction of distributions from completely different models of computation, showing a convergence of high frequency elements and therefore a relatively robust measure,

(b) the performance of an actual experiment related to an epistemological notion of simplicity conducted for the first time and related to the old philosophical concept of Ockham’s razor, in connection to a characterisation by algorithmic complexity, showing that both this formalisation and the experimental results conform with Ockham’s and theoretical expectations of both the notion (tested against the intuitive expectation and other measures) and the theories themselves (consistent with algorithmic complexity and algorithmic probability).

(c) a solution to the problem of estimating the algorithmic complexity of short strings (or at least their rank relative to each other), something that statistical compression algorithms had failed to solve or shed light upon (as they collapse most elements into the same compressed length values) hence providing not only new means toward a more coarse-grained measure but also enabling a wider number of applications that require manipulation of small objects such as in perturbation analysis (key to causal discovery).

(d) the introduction of a native multi-dimensional measure of tensor algorithmic complexity based on n-dimensional models of computation (e.g. turmites in 2D).

(2) based on (1), the introduction of what we call the Coding Theorem Method (and its extension, the Block Decomposition Method), the only alternative to statistical compression (such as LZW) in applications of algorithmic complexity, that is today used and cited in dozens of applications in science, ranging from psychometrics to molecular biology.

(3) One of these applications included the conduction of an ‘inverse Turing test’ experiment with more than three thousand humans ranging from the age of 10 to 70 where we were able to prove that cognitive evolution can be captured by our measure. We asked humans to produce randomness that could be tested against randomness produced by computable means according to algorithmic probability. The results could not be reproduced with any other traditional measure and showed that cognitive abilities picked at 25, and cognitive decline started at age 60 consistent with the knowledge from the literature in the area but without the need to use any other measure or data traditionally used.

(4) enabled by (2) but independent of it, the application of algorithmic complexity and algorithmic probability to dynamical systems and to the foundations of artificial intelligence, going beyond the purely theoretical as other authors have managed to some extent, leading to, for example, disproving previous assumptions related to e.g. the power of deep learning allegedly based on differentiability,

(5) the creation and introduction (together with my brilliant collaborators), of the field of Algorithmic Information Dynamics, a discrete calculus in software space that combines algorithmic complexity and perturbation analysis to navigate between the physical world and software space (as in the Matrix) to conduct guided searches (in the space of all computer programs) so as to reveal computable (generative and mechanistic) models able to explain observations made in the the (apparent) real world, thereby enabling automated causal and scientific discovery.

(6) the introduction of methods and definitions of asymptotic statistical evidence of Turing universality, the application of which has led to evidence suggesting pervasive computational universality among the space of all computer programs.

Contributions 1 and 2 are relevant because, while not the ultimate solution, the methods introduced are the only ones currently able to practically extend the capabilities of approaches based on statistical compression algorithms that have been used (and abused) for purposes mostly related to the categorisation of static objects showing repetitions. Among these extended capabilities are those dealing with (a) small objects (like strings) that are fundamental in areas such as perturbation analysis and molecular biology and (b) recursive properties of objects and systems that statistical compression algorithms would completely overlook.

Contribution 3 is relevant because we have been able to show, for example, how modularity can be an emergent property (e.g. genes) of an algorithmic search in software space, how the most likely genetic pathways of cancer (genetic changes) can be inferred, and how patterns in structure and function in DNA can help us understand genetic regulation.

Other specific contributions are explained below.

Nature, the top-ranked journal, produced this video (by their own initiative) to explain my research on causal deconvolution based on algorithmic probability, based on our paper published in the same journal:

I was invited to write an essay on Computing in the Year 2065 for a new Springer book edited by Prof. Andrew Adamatzky. My contribution is titled Reprogramming Matter, Life, and Purpose of which first draft can be read here. I was also invited to write about how to be unconventional in science which I did under the title Paths to Unconventional Computing: Causality in Complexity, available here.

I have introduced native measures of universal complexity i.e. independent of description language and selected feature(s). This was by way of algorithmic probability based on

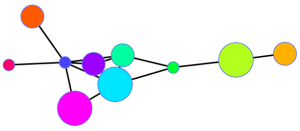

To illustrate the problem of ad-hoc measures I constructed a network whose node degrees follows the so-called Champernowne artificially-constructed Borel normal constant thus indicating maximal entropy (and maximal

To objectively characterise an object is a major challenge in science. Few measures have the capabilities to do so, in particular, those that are algorithmic

I organized and moderated what I think will historically be among some of the most interesting public discussions of some of the most brilliant minds

Because data of interest is usually, if not always, produced by mechanistic and algorithmic causes, I have proposed an empirical Bayesian approach to science where

In my personal quest to understand the process of, and the sources of creativity, I have explored apparently disparate areas, such as cooking. I have

This picture, titled “Visualising the Computational Universe: Runtime Space in a Peano Curve,” won me 1st. prize in the Computational Imagery category of the “2011 Kroto Institute Scientific Image Competition” held in the UK. Here is the explanation: It represents a small slice of the computational universe of all possible computer programs.

Each pixel represents a computer program with empty input running on an abstract computer (a Turing machine). The color indicates the runtime of the program upon completion of its computation. The darker a pixel the longer the program took to produce its output. White pixels are computer programs that do not produce any output because they never halt (just as happens when your computer stalls forever, sometimes making you reboot).

Red pixels are the computer programs that take the longest time—relative to other programs of the same size—before finishing (they are also called Busy Beavers). I call this color type coding the computability spectrum. Programs were sorted by size from smallest to largest (in terms of number of instructions) in such a way that one could be certain that any program would eventually be reached (including versions of MS Windows and Angry Birds that can be encoded as one-run computer programs).

Because there is no upper limit to the size of programs, the space of all computer programs is infinite but discrete, perhaps mirroring our own world. Because an enumeration of computer programs is linear (you start with program 1, then proceed to program 2, and so on) pixels depicted in this 2-dimensional figure are disposed along a “space-filling” Peano curve. This is a curve that guarantees that 2 contiguous elements in the enumeration remain as close as possible to each other in the 2D configuration.

The picture has the striking particularity that it is ultimately uncomputable. Imagine you point a telescope and take a picture of an object in the computational universe for which some pixel values cannot be determined, not only in practice but in principle– that is, no matter how good your camera, or how many ‘terapixels’ some future version of it may have. This slide of the computational universe was only possible because it is a tiny region of small computer programs for which all pixels can be determined by zooming in deep enough.

However, Gödel and Turing proved that if you zoomed out to a larger view of the computational universe, the picture would begin to look completely blurry because pixel colours would not be determinable. In other words this picture is the inverse equivalent of Hubble’s Deep Field space–the larger the space field the blurrier.

While we can only have access to the visible universe determined by the speed of light, we can only see a small part of the computational universe due to similar limitations both physical and computational (it seems we cannot build more powerful computers than the ones we already have to overcome these limitations). Hence, most of the computational space will remain unknowable, not only due to a limitation of resources but due to a fundamental barrier established in the digital world and extending into our own reality.

Only incremental improvements to this picture are possible. While the picture is ultimately uncomputable and unattainable, one can make an informed guess as to what it would look like if zooming out. The theory predicts the average color of the picture at a greater scale, this theory being the theory of algorithmic probability, closely related to what is known as the Chaitin Omega number, or the halting probability. It turns out that the picture will be mostly white, as in an ever expanding universe, finding computer programs that halt and produce something will be increasingly harder. Indeed, most programs will never halt, and those that halt will do so relatively quickly in proportion to their size (see paper P10).

Algorithmic probability also tells us that most programs will produce an output following exponential decay (-log_2 Pr(s)) hence most of them will produce the same strings and the fewest will produce algorithmically random digital sequences. In other words, among the computer programs that do produce an output, most of them will be highly ordered, as if they were creating a structured digital universe of highly ordered patterns out of nothing (just like ours). Some of these programs that produce the same output may do so faster than others, but precisely how much faster a program can be is another open foundational question and one of the greatest challenges in mathematics and computer science. If it were possible to find a program considerably faster than any other, then everything could be computed in ‘reasonable’ time, regardless of the apparent difficulty of the computation.

A full technical explanation of some of this and of how the image was generated was published in this paper:

H. Zenil, From Computer Runtimes to the Length of Proofs: With an Algorithmic Probabilistic Application to Waiting Times in Automatic Theorem Proving In M.J. Dinneen, B. Khousainov, and A. Nies (Eds.), Computation, Physics and Beyond International Workshop on Theoretical Computer Science, WTCS 2012, LNCS 7160, pp. 223-240, Springer, 2012.

A preprint is available online here.